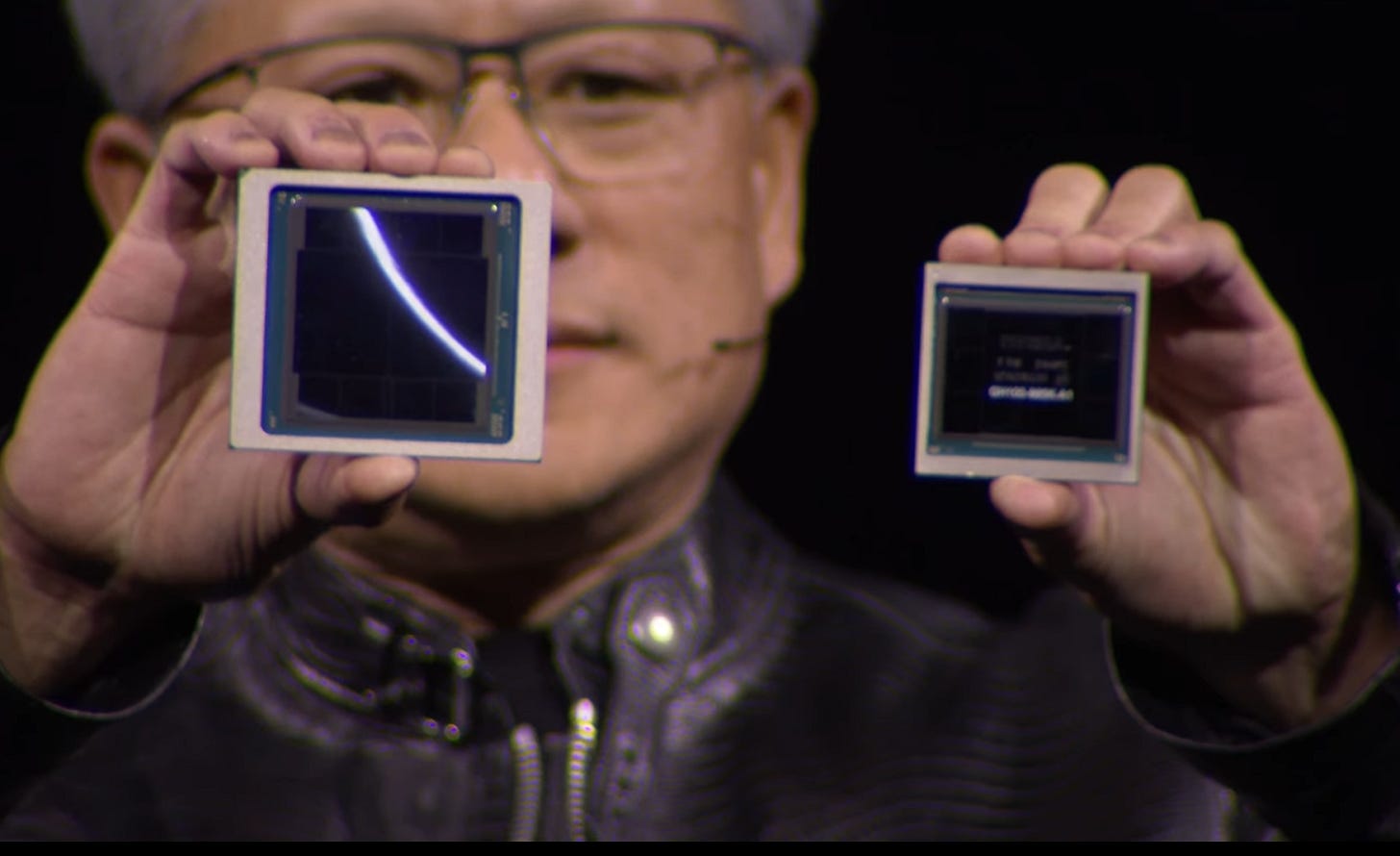

For semiconductors, this decade is all about vertical integration: stacking chiplets on top of each other and stacking transistors. That’s great for performance, but very problematic for cooling.

The heat problem

We’ve managed to continuously improve performance by packing more and more transistors into the size of a chocolate square. But now, there are so many transistors that they cannot all be used at once without the chip overheating. The amount of heat a chip is designed to dissipate is expressed as its TDP, which stands for thermal design power and is given in watts. It’s based on the maximum heat flux that can be removed from a chip.

If we take NVIDIA’s H100 GPU, its TDP is around 700W. The latest NVIDIA Blackwell GPU dissipates about 1kW of heat. One of the problems with such powerful chips is that, while they perform operations, about half of the chip is dark silicon.

Dark silicon is a phenomenon where a significant portion of the transistors on the chip cannot be computing at the same time due to power and thermal constraints.
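To make the idea concrete, here is a minimal back-of-the-envelope sketch. It assumes, purely for illustration, that chip power scales linearly with the fraction of transistors that are switching. The function name and the 1400W "everything on" figure are hypothetical and not taken from any datasheet.

```python
def dark_silicon_fraction(tdp_watts: float, full_activity_power_watts: float) -> float:
    """Estimate the fraction of a chip that must stay idle to respect its TDP.

    Assumption (simplification): power scales linearly with the active fraction.
    """
    if tdp_watts <= 0 or full_activity_power_watts <= 0:
        raise ValueError("power values must be positive")
    # The active fraction cannot exceed 100% of the chip.
    active_fraction = min(1.0, tdp_watts / full_activity_power_watts)
    return 1.0 - active_fraction


# Hypothetical chip: 700 W thermal budget, but it would draw 1400 W
# if every transistor were switching at once.
print(dark_silicon_fraction(700.0, 1400.0))  # 0.5
```

Under this simple model, better cooling raises the thermal budget and directly shrinks the dark fraction.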

What makes it even worse is the ongoing vertical integration. It all started by stacking chiplets, smaller pieces of chips that have their own function, on top of each other. That’s great for their performance but very problematic for cooling. The same trend is happening with the chip’s building blocks - transistors - as we’re now transitioning towards stacking nanosheets vertically. Well, what are we going to do with all the additional heat that will come as a result of this?

Keeping it cool

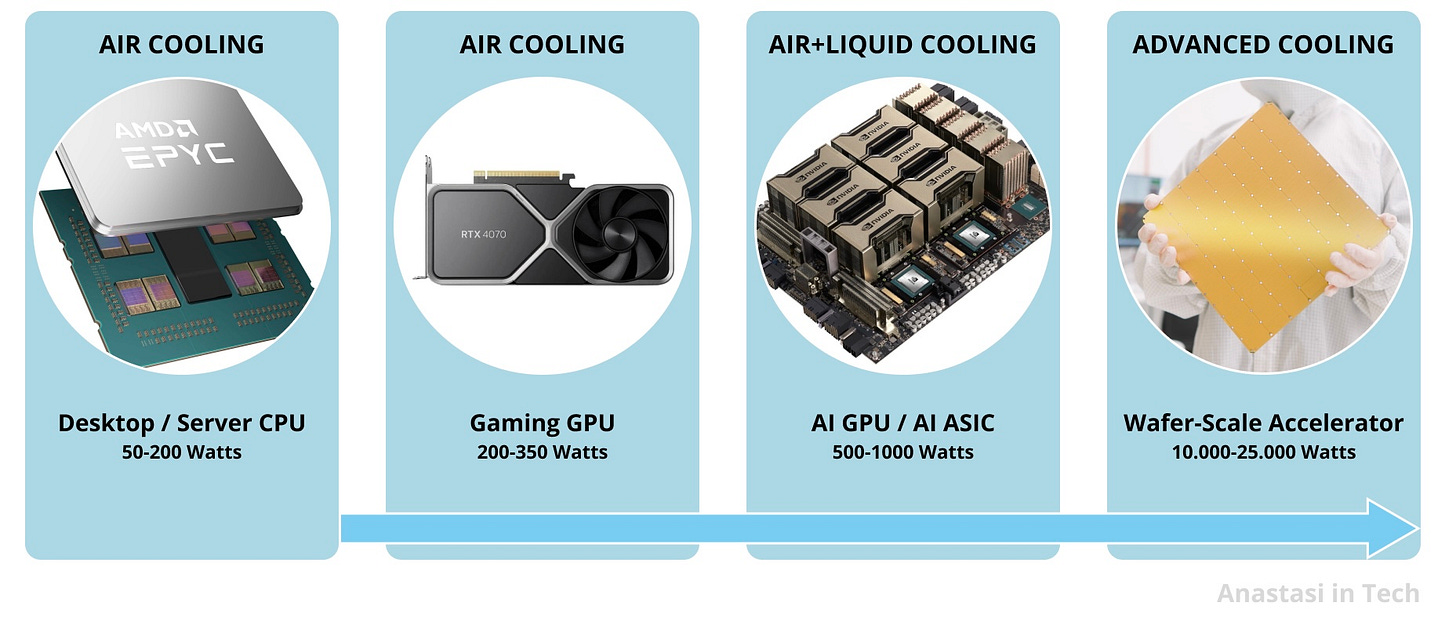

There are many ways to keep chips cool. Typically, what we do is conduct the heat away from the chip and then dissipate it somewhere else. By far the most popular solutions involve cooling with air or liquid. Cooling with air works for some desktop chips and some server processors like AMD’s EPYC Milan CPU, which dissipates about 280W.

However, somewhere around 300W TDP, we reach the limit of how much we can cool with air alone. Above that, we have to switch to liquid cooling, which can conduct up to 3,000 times more heat than air can. This works, for example, with NVIDIA GPUs like the A100 and H100, which dissipate up to about 700W of heat.
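One common way to reason about such limits is the steady-state thermal resistance model, where junction temperature equals coolant temperature plus power times thermal resistance. The sketch below uses that model. The resistance values, the 95°C junction limit, and the 35°C coolant temperature are hypothetical numbers chosen for illustration, not measurements of any real cooler.

```python
def junction_temperature(power_w: float, coolant_temp_c: float,
                         thermal_resistance_k_per_w: float) -> float:
    """Steady-state junction temperature: T_j = T_coolant + P * R_th."""
    return coolant_temp_c + power_w * thermal_resistance_k_per_w


def max_power(max_junction_temp_c: float, coolant_temp_c: float,
              thermal_resistance_k_per_w: float) -> float:
    """Largest power that keeps the junction at or below its limit."""
    if thermal_resistance_k_per_w <= 0:
        raise ValueError("thermal resistance must be positive")
    return (max_junction_temp_c - coolant_temp_c) / thermal_resistance_k_per_w


# Hypothetical junction-to-coolant resistances (assumptions, in K/W).
AIR_COOLER_R = 0.25
LIQUID_COLD_PLATE_R = 0.0625

print(max_power(95.0, 35.0, AIR_COOLER_R))         # 240.0
print(max_power(95.0, 35.0, LIQUID_COLD_PLATE_R))  # 960.0
print(junction_temperature(700.0, 35.0, LIQUID_COLD_PLATE_R))  # 78.75
```

The point of the model is that the power ceiling is set by the thermal resistance and the available temperature headroom, so lowering either the resistance or the coolant temperature raises how much power the chip can sustain.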

Cooling the Hottest Chips

Eventually, we end up guiding as much heat as we can to heat sinks or cold plates. In any case, even heat sinks have limits. So, if we want to cool down something like a DOJO training tile that dissipates up to 15,000W of heat, or when we cool down Cerebras’ Wafer Scale Engine that dissipates up to 25,000W, clearly we need to use something much more advanced. I explained how it’s done in this video.

Now, air or liquid cooling is what we typically think of when we think of cooling. But actually, work on cooling starts during the process of designing chips. When I was working as a chip designer, we already considered the most thermally-aware ways of placing the blocks and cells on the chip during the physical design phase. We also had to keep in mind the switching activity of the blocks, as some parts of the chip switch more than others. So we placed these blocks in a way that minimized both the peak temperature and the temperature gradients across the chip.
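As a toy illustration of thermally-aware placement, the sketch below brute-forces the arrangement of a few blocks on a small grid and scores each arrangement by peak temperature plus a penalty for the temperature spread. Everything here is an assumption made for illustration: the inverse-square heat coupling, the grid size, the block powers, and the weighting. Real physical design tools use proper thermal solvers and far smarter optimization.

```python
from itertools import permutations


def temperature_map(placement, rows, cols):
    """Toy temperature per slot; placement[i] is the power of the block in slot i.

    Assumption: each block heats every slot with a strength that falls off
    with the square of (1 + Manhattan distance). Units are arbitrary.
    """
    temps = []
    for i in range(rows * cols):
        ri, ci = divmod(i, cols)
        total = 0.0
        for j, power in enumerate(placement):
            rj, cj = divmod(j, cols)
            distance = abs(ri - rj) + abs(ci - cj)
            total += power / (1 + distance) ** 2
        temps.append(total)
    return temps


def cost(placement, rows, cols, gradient_weight=0.5):
    """Peak temperature plus a weighted penalty for the hottest-to-coolest spread."""
    temps = temperature_map(placement, rows, cols)
    peak = max(temps)
    return peak + gradient_weight * (peak - min(temps))


def best_placement(block_powers, rows, cols):
    """Exhaustive search over all arrangements; only feasible for tiny grids."""
    candidates = set(permutations(block_powers))
    return min(candidates, key=lambda p: cost(p, rows, cols))


# Hypothetical design: two high-activity blocks and four low-activity ones
# on a 2x3 grid, listed in row-major order.
naive = (10.0, 10.0, 1.0, 1.0, 1.0, 1.0)  # hot blocks side by side
best = best_placement(naive, rows=2, cols=3)
print(cost(naive, 2, 3), cost(best, 2, 3))
```

In this toy model the search lowers the cost by moving the two high-activity blocks away from each other, which is the same intuition behind spreading out the hottest blocks on a real chip.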

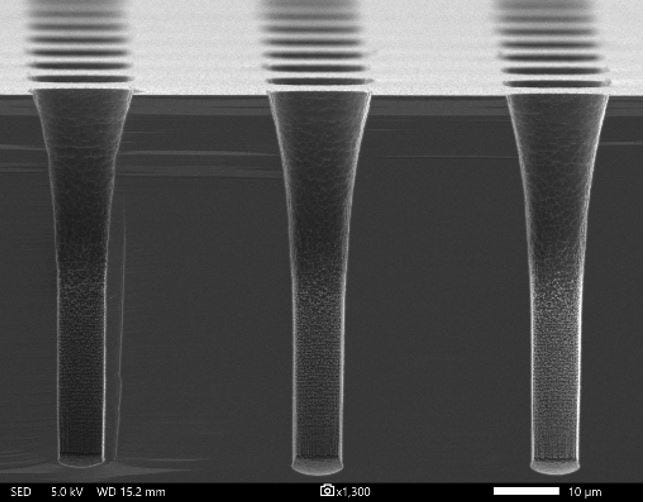

But that’s usually not enough, so for 3D chips, we have to create heat corridors by placing TSVs in a particular way to help spread the heat evenly. TSVs are through-silicon vias, which are copper connections that travel through the silicon die. These are used to connect chiplets in designs like AMD’s MI300, which has 13 chiplets stacked together and acts as one single chip, and Intel's Ponte Vecchio GPU. Also, with TSVs, we provide vertical and lateral pathways for heat dissipation. So they do help to guide some heat away, but it’s not a silver bullet, unfortunately.

Recent Cooling Innovations

Now, it's clear that we will see more powerful chips and AI ASICs coming in the next few years. Managing the heat produced by these chips is going to be one of the biggest challenges in electronics going forward. We already have to resort to immersion cooling, sinking whole racks into tanks of liquid. The next step is obviously to bring this fluid even closer to the source of that heat, closer to the transistors.

Just think for a moment how efficient it would be if coolants flowed inside processors!

This idea is called embedded cooling: we bring the cooling to the interior of the silicon, super close to the computing cores that are running the job. This helps to light up those dark silicon parts that we discussed earlier, giving a strong boost to chip performance. I explain how it works in every detail in this video.

According to the paper, it improves the cooling efficiency by a factor of at least 50. This cooling technology is so cool that it has its own fan club 😂. If you enjoyed this post, please share it with your friends and on social media. Thanks a lot!

While we’re talking about hot chips today: the Hot Chips Conference will take place at Stanford University from August 25 to 27. There will be discussions about AI in chip design, all the recent advances in hot chips, and the cooling technologies of the future. Check it out and register here. I’ll be attending it in person. If you are there, please come and say hi.

Love your articles and YouTube videos.

There is still the issue of dissipating the waste heat, that is, keeping the coolant cool. Maybe we should be using the waste heat to heat buildings and homes. I remember steam tunnels in cities that were used to heat buildings. This would just be a different heat source than burning fuels to produce steam or, more practically, hot water.

On a small scale, I looked at ads for gamer computers with liquid cooling. They seem to have multiple fans to cool the liquid and dissipate the heat.

Hey, this is Frank, and I have an automotive perspective on cooling. What I learned: In automotive, engineers have historically designed against the maximum junction temperature Tj. In many cases this was 125°C; in some special applications it goes up to 165°C. Chips need to withstand a certain temperature-over-lifetime profile, which has to be calculated carefully so that it fulfills the requirement of an automotive life (e.g. 15,000h of operation). But things are changing here also! With ever higher-performance chips, especially in infotainment and ADAS, there are challenges ahead. What I learned the hard way: It is not only dark silicon that makes it complicated, but also leakage currents, which depend exponentially on temperature. So my recommendation is:

Cool it baby, cool it!

There was light at the end of the tunnel when electrification of vehicles became more and more of a thing. In EVs there is almost always a good possibility to "attach" to a liquid cooling system. There are even sophisticated intelligent systems in EVs (e.g. Tesla Octovalve) which balance temperature "needs" between systems as much as possible. This results in better temperature-over-lifetime profiles and less TDP, which is good for the silicon in terms of lifetime, reliability and, surprisingly, also safety (FIT rates are also calculated based on temperature profiles).

Unfortunately, especially in Europe and the US, OEMs are stepping back from 100% electrification by 203x, and so everything that looked so bright is going south. The vehicle platforms will continue to support both electric motors and internal combustion engines. The worst-case inlet liquid temperature at your cooling plate is ~70°C ... thanks to the ICE ...