Probabilistic Computing: The Next Frontier

A new approach reportedly offers up to 100 million times better energy efficiency than the best Nvidia GPUs.

Analog computers once dominated the world, but they were complex, noisy, and inaccurate. In the early 1960s, we shifted to digital chips, which are precise, deterministic, and powerful. That technology is now approaching its physical limits, and we are on the brink of yet another paradigm shift: probabilistic computing, or, as some call it, thermodynamic computing.

This new technology completely flips the script. Instead of fighting noise, as we have for the past 60 years, it embraces noise and harnesses it for computation.

What is Probabilistic Computing

Modern classical computers are built from transistors, which are deterministic and highly precise devices. They operate by switching between two states, 0 and 1, and this binary system powers nearly all modern computational tasks.

However, the world isn’t binary: nature, financial markets, and even artificial intelligence operate probabilistically. Forcing deterministic machines to solve probabilistic problems is both energy-intensive and slow.

As early as 1982, Richard Feynman suggested that, rather than forcing traditional, deterministic computers

“…to simulate a probabilistic Nature, …we need to build a new kind of computer, which itself is probabilistic, … in which the output is not a unique function of the input”.

In other words, instead of forcing classical computers to mimic probability, why not use probabilistic systems to tackle probabilistic problems directly?

Roughly 40 years later, researchers at MIT, the University of California, Santa Barbara, and Stanford, along with startups such as Normal Computing and Extropic, began working on probabilistic computers.

Probabilistic computing is an intriguing concept that sits between the classical and quantum approaches. Many implementations work at room temperature, using devices that harness noise from the environment and amplify it.

The Building Blocks: P-Bits

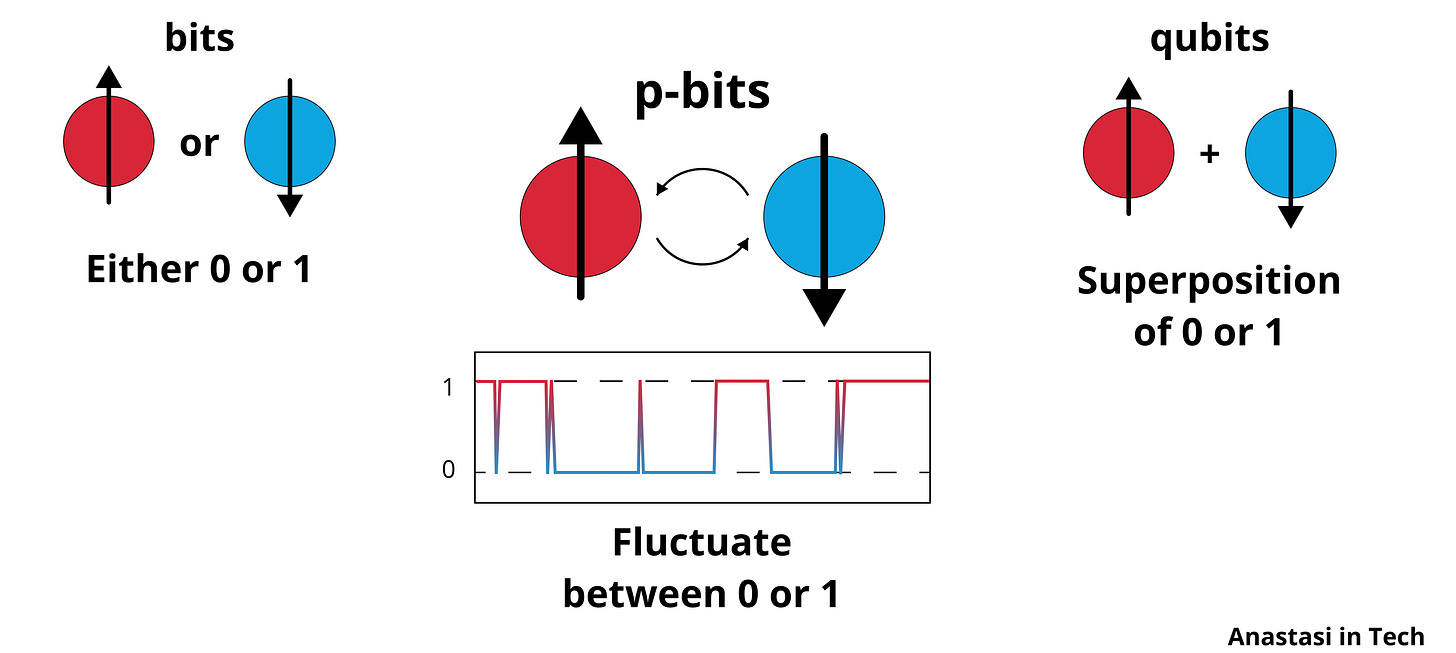

We all know the foundation of modern computing: bits, the 0s and 1s that CPUs and GPUs use to perform logical operations. At the other end of the spectrum are qubits. These quantum bits can exist in a superposition of 0 and 1, and they are the foundation of quantum computing.

In between these two sit p-bits, or probabilistic bits, which are designed to fluctuate between 0 and 1 in a purely classical, non-quantum manner. They switch between states randomly, driven by thermal energy. I explain in detail how a probabilistic computer works in this video.
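To make the idea concrete, here is a minimal Python sketch of a single p-bit. It assumes the idealized behavioral model common in the p-bit literature, m = sgn(tanh(βI) − r), where r is drawn uniformly from (−1, 1), the input I biases the bit, and β (an inverse "temperature") sets how strongly the input matters. Python's pseudo-random generator stands in for physical noise, and the function names are hypothetical, not any vendor's API.

```python
import math
import random


def p_bit(input_current: float, beta: float, rng: random.Random) -> int:
    """Return +1 or -1 using the idealized p-bit rule m = sgn(tanh(beta * I) - r).

    r is drawn uniformly from (-1, 1) and stands in for physical (e.g. thermal) noise.
    """
    r = rng.uniform(-1.0, 1.0)
    return 1 if math.tanh(beta * input_current) > r else -1


def fraction_of_ones(input_current: float, beta: float, n_samples: int, seed: int = 0) -> float:
    """Sample one p-bit n_samples times and return the fraction of +1 outputs."""
    rng = random.Random(seed)
    ones = sum(1 for _ in range(n_samples) if p_bit(input_current, beta, rng) == 1)
    return ones / n_samples


if __name__ == "__main__":
    for current in (-2.0, -0.5, 0.0, 0.5, 2.0):
        measured = fraction_of_ones(current, beta=1.0, n_samples=100_000)
        expected = (1 + math.tanh(current)) / 2  # P(m = +1) under this model
        print(f"I = {current:+.1f}: measured {measured:.3f}, expected {expected:.3f}")
```

With zero input the bit behaves like a fair coin; a strong positive or negative input pins it near +1 or −1. That tunable randomness is what networks of p-bits build on.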

Boltzmann law

The foundation of probabilistic computing is the Boltzmann law (the Boltzmann distribution), which describes how likely a system, such as a collection of atoms or molecules, is to be found in each of its possible states at a given temperature. Essentially, it states that lower-energy states are exponentially more likely to be occupied than higher-energy ones, and we can use this principle to compute. By encoding a problem so that good answers correspond to low-energy states, we can let the physical system find its most probable states and thereby perform complex probabilistic calculations; the answer is found when the system reaches equilibrium.
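The Boltzmann law assigns each state s a probability P(s) = exp(−E(s)/kT) / Z, where Z sums exp(−E/kT) over all states. The sketch below is a toy illustration rather than a model of any real chip: it enumerates every state of a hypothetical three-spin system with made-up couplings J and bias h, then shows that the lowest-energy state is the most probable and that lowering the temperature concentrates probability on it. Energies and temperatures are in arbitrary units with k set to 1.

```python
import itertools
import math

# Hypothetical toy problem: three spins (each +1 or -1) with Ising-style couplings.
# E(s) = -sum_{i<j} J_ij * s_i * s_j - sum_i h_i * s_i
J = {(0, 1): 1.0, (1, 2): 1.0, (0, 2): -0.5}
h = [0.2, 0.0, 0.0]


def energy(state):
    """Ising-style energy of one spin configuration (a tuple of +1/-1 values)."""
    pair_term = sum(w * state[i] * state[j] for (i, j), w in J.items())
    field_term = sum(hi * si for hi, si in zip(h, state))
    return -pair_term - field_term


def boltzmann_distribution(temperature):
    """Exact Boltzmann probabilities P(s) = exp(-E(s)/T) / Z, Boltzmann constant set to 1."""
    states = list(itertools.product((-1, 1), repeat=len(h)))
    weights = [math.exp(-energy(s) / temperature) for s in states]
    z = sum(weights)  # partition function
    return {s: w / z for s, w in zip(states, weights)}


if __name__ == "__main__":
    for t in (2.0, 0.5):
        dist = boltzmann_distribution(t)
        best = max(dist, key=dist.get)
        print(f"T = {t}: most probable state {best}, E = {energy(best):.2f}, P = {dist[best]:.3f}")
```

Raising the temperature flattens the distribution toward uniform, while lowering it piles probability onto the ground state (1, 1, 1). Exhaustive enumeration only works for tiny systems; the appeal of probabilistic hardware is sampling from this distribution physically when there are far too many states to list.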

Thermodynamic computer

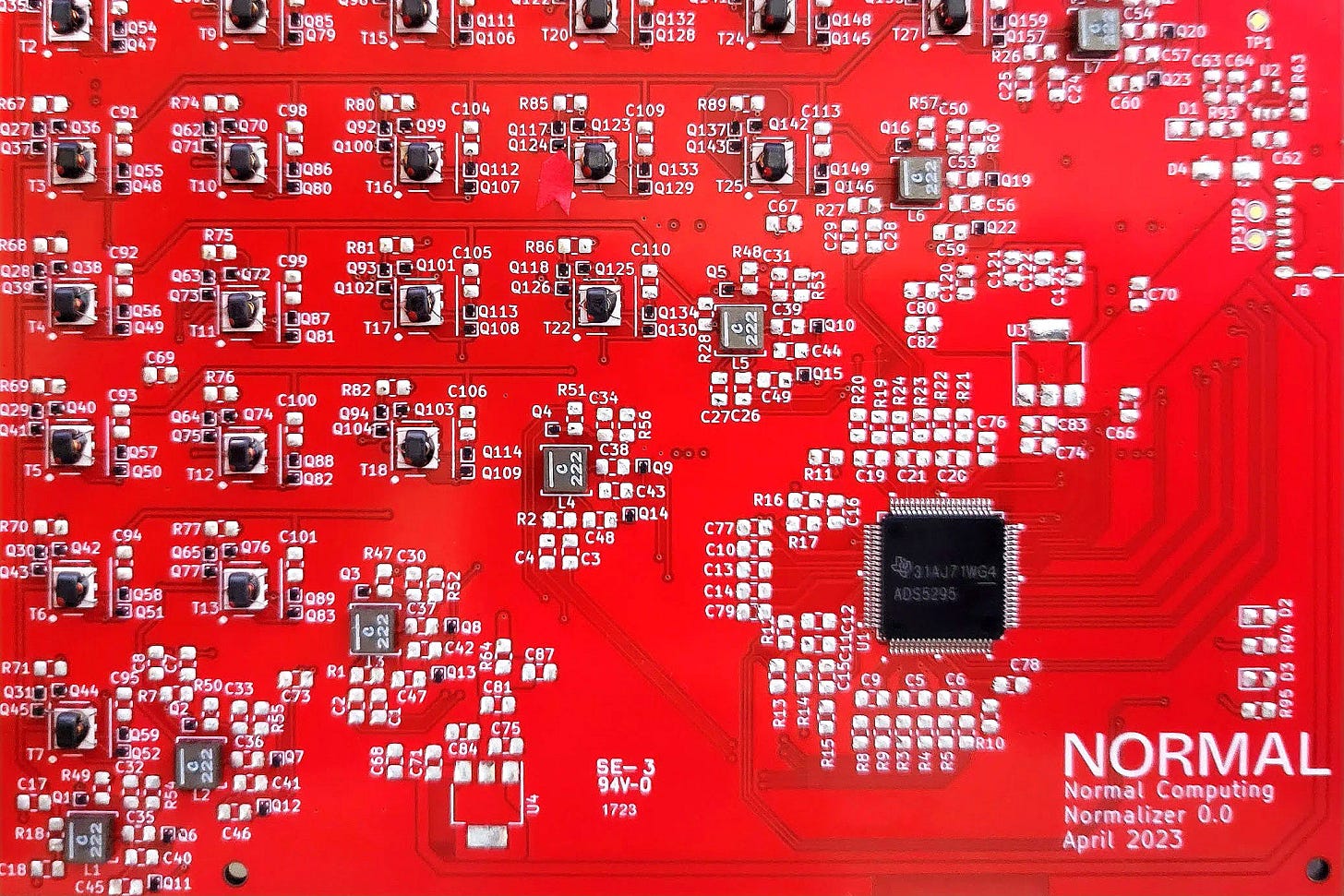

This approach has been gaining serious momentum recently. You may have heard of the hot new startups Extropic and Normal Computing, which are building thermodynamic computers.

Extropic is building a thermodynamic computer and creating energy-based models that perform computation through heat dissipation. The working principle is based primarily on the second law of thermodynamics, which states that the total entropy of an isolated system never decreases and increases in any natural (irreversible) process. As discussed above, Extropic uses the system's natural tendency to settle into low-energy states as a computational resource, so that the computation is carried out by a physical process.
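The paragraph above combines the second law (entropy grows) with the idea that the system seeks low energy. The standard textbook bridge between the two, sketched here for a generic system held at temperature T by its surroundings and not for any specific Extropic device, is the free energy F = ⟨E⟩ − TS, with S = −k_B Σ p(s) ln p(s). For such a system, the second law applied to system plus surroundings means F can only decrease. Minimizing F over all probability distributions p(s), using a Lagrange multiplier λ to enforce normalization, recovers the Boltzmann distribution:

```latex
F[p] = \sum_s p(s)\,E(s) + k_B T \sum_s p(s)\,\ln p(s), \qquad \text{subject to } \sum_s p(s) = 1

\frac{\partial}{\partial p(s)} \Big[ F[p] + \lambda \Big( \sum_{s'} p(s') - 1 \Big) \Big]
  = E(s) + k_B T \big( \ln p(s) + 1 \big) + \lambda = 0

\Longrightarrow \quad p(s) = \frac{e^{-E(s)/k_B T}}{Z}, \qquad
Z = \sum_s e^{-E(s)/k_B T}, \qquad F_{\min} = -k_B T \ln Z
```

As T approaches zero the entropy term fades and the minimizing distribution concentrates on the lowest-energy states, which is why "minimizing energy" is a reasonable shorthand.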

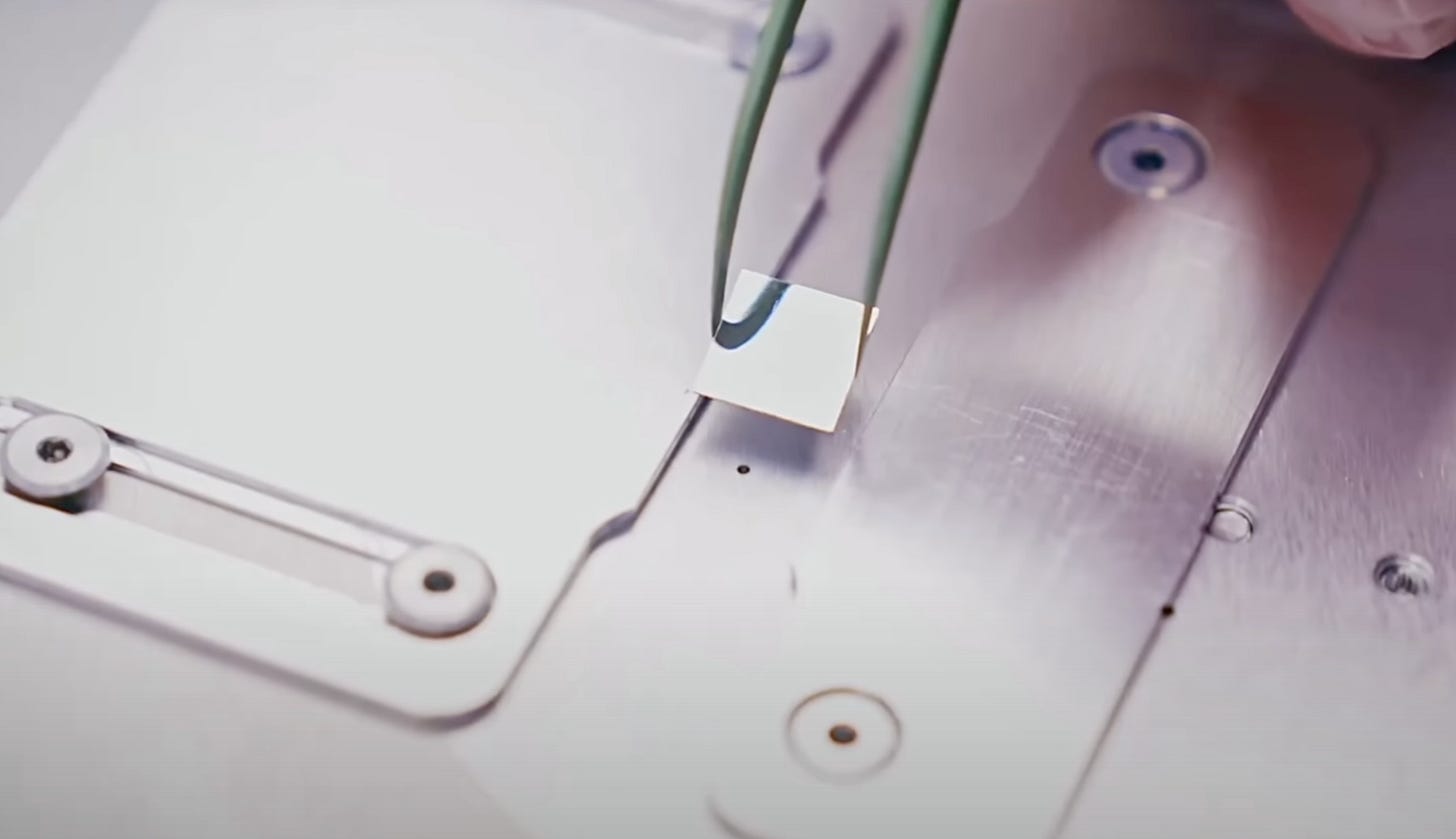

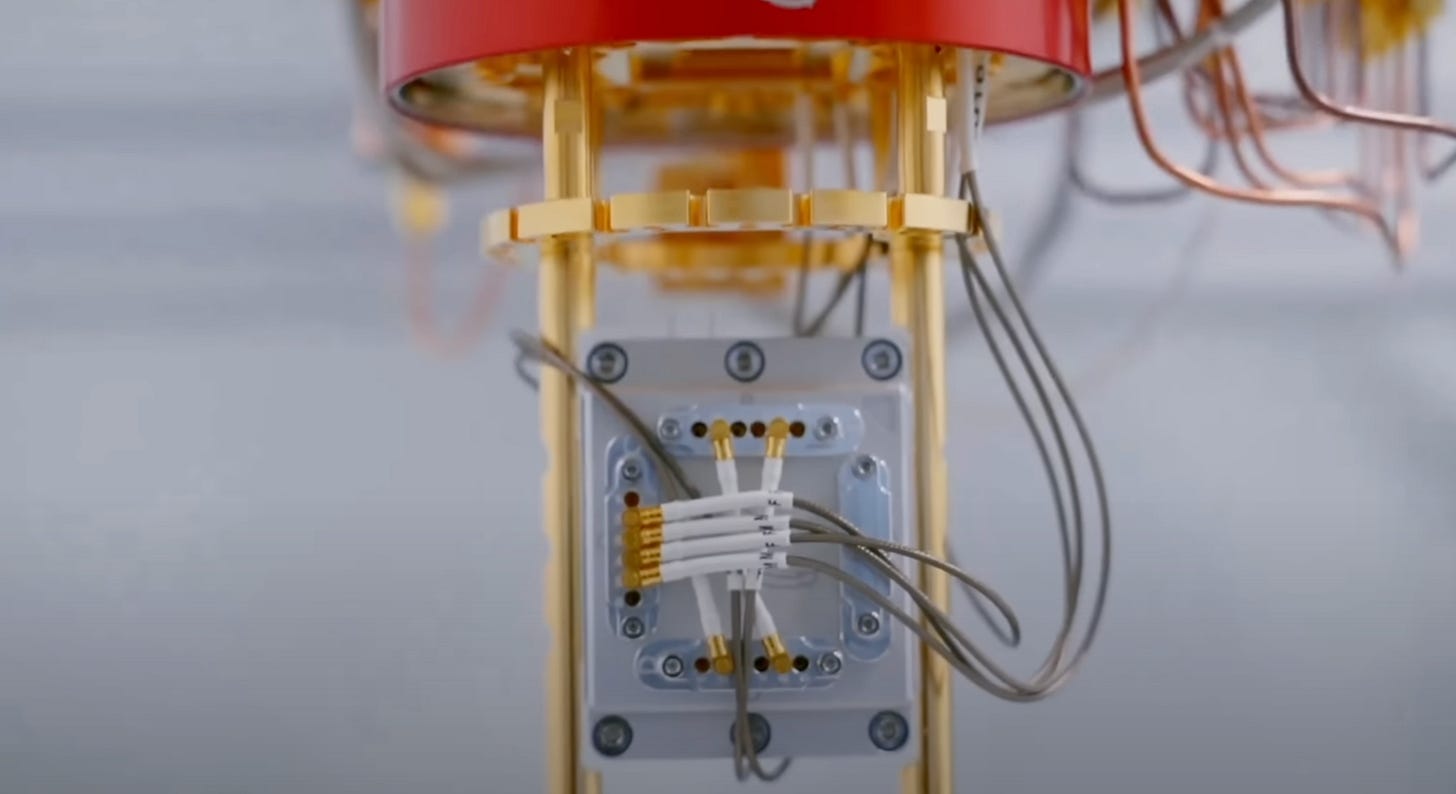

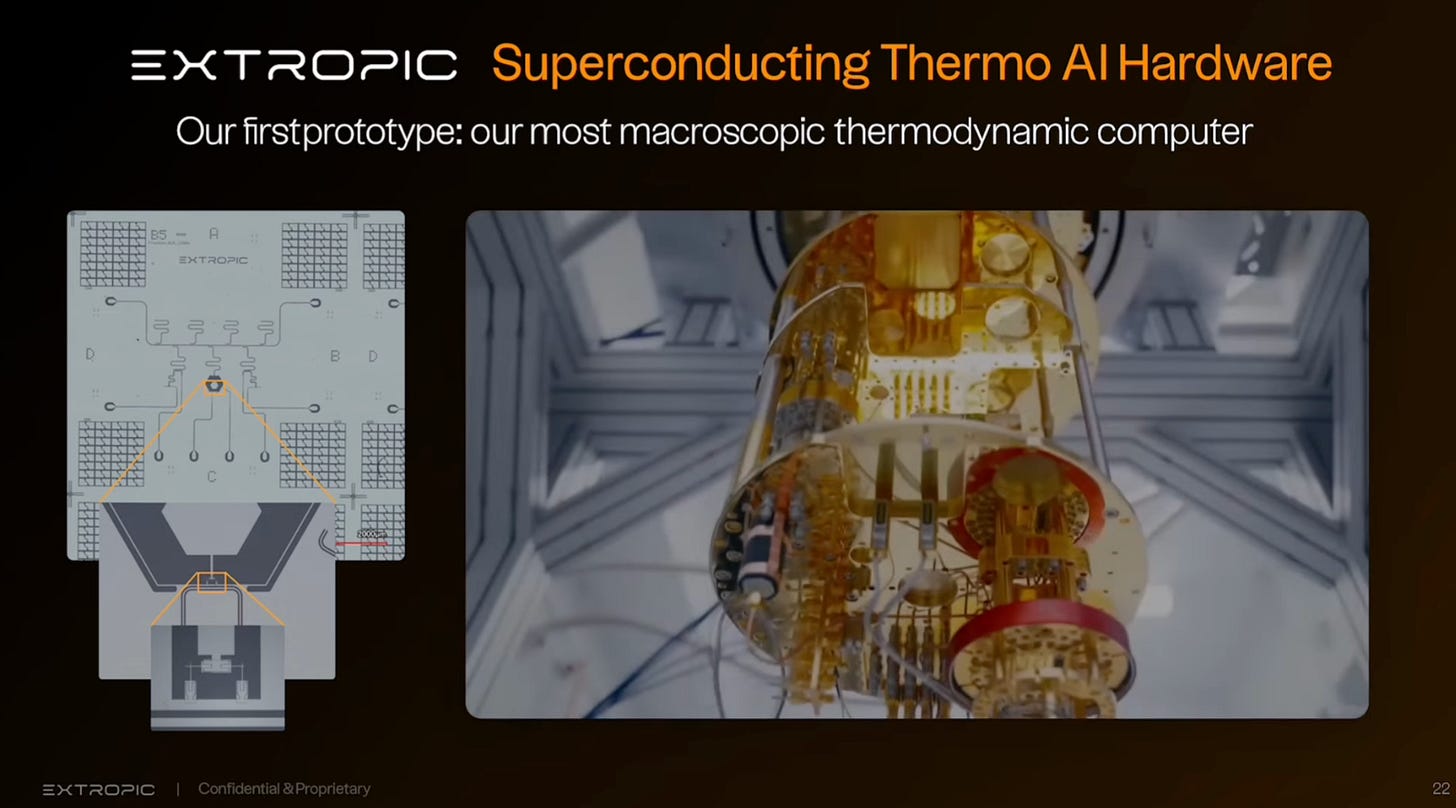

In practice, Extropic uses Josephson junctions (JJs) to build very fast probabilistic bits. A JJ consists of two superconductors separated by a thin insulating layer, and when its energy barrier is low enough, it fluctuates between states. Extropic configures the system and lets it run; it probabilistically explores many states, mimicking a thermodynamic process, and the resulting thermodynamic equilibrium is the solution to the problem.
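The "configure it and let it run" workflow can be imitated in software. The sketch below is a hypothetical stand-in for such hardware, not Extropic's actual method: it encodes a small max-cut problem as the couplings J and biases h of an Ising energy, updates a network of idealized p-bits one at a time with m_i = sgn(tanh(β·I_i) − r), where I_i = Σ_j J_ij·m_j + h_i (which is Gibbs sampling of the Boltzmann distribution), and slowly raises β (cools the system) so it settles into low-energy states. As a practical heuristic it also records the lowest-energy state it visits. The graph, schedule, and function names are all made up for illustration.

```python
import itertools
import math
import random

# Hypothetical example problem: max-cut on a small graph, encoded as an Ising energy
# E(m) = -sum_{i<j} J_ij m_i m_j - sum_i h_i m_i, with J_ij = -1 on every edge and h = 0.
# Lower energy means more edges cut between the +1 group and the -1 group.
N = 5
EDGES = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0), (0, 2)]
J = [[0.0] * N for _ in range(N)]
for a, b in EDGES:
    J[a][b] = J[b][a] = -1.0
h = [0.0] * N


def energy(m):
    pairs = sum(J[i][j] * m[i] * m[j] for i in range(N) for j in range(i + 1, N))
    return -pairs - sum(h[i] * m[i] for i in range(N))


def anneal(sweeps=2000, beta_start=0.1, beta_end=5.0, seed=0):
    """Update idealized p-bits one at a time while slowly raising beta (cooling).

    Returns the lowest-energy state visited and its energy.
    """
    rng = random.Random(seed)
    m = [rng.choice((-1, 1)) for _ in range(N)]  # random initial state
    best, best_e = list(m), energy(m)
    for sweep in range(sweeps):
        # Linear schedule from beta_start to beta_end.
        beta = beta_start + (beta_end - beta_start) * sweep / max(1, sweeps - 1)
        for i in range(N):
            local_input = sum(J[i][j] * m[j] for j in range(N)) + h[i]
            m[i] = 1 if math.tanh(beta * local_input) > rng.uniform(-1.0, 1.0) else -1
        e = energy(m)
        if e < best_e:
            best, best_e = list(m), e
    return best, best_e


def brute_force_min():
    """Exhaustively check all 2^N states (only feasible for tiny N)."""
    return min(energy(s) for s in itertools.product((-1, 1), repeat=N))


if __name__ == "__main__":
    state, e = anneal()
    cut = sum(1 for i, j in EDGES if state[i] != state[j])
    print(f"state {state}, energy {e}, edges cut {cut} of {len(EDGES)}, exact min {brute_force_min()}")
```

Because the toy graph contains a triangle (nodes 0, 1, 2), at most five of its six edges can be cut, so the minimum energy is −4. The premise of probabilistic hardware is to perform the noisy update step physically rather than in a software loop like this one.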

This computing approach appears to have advantages for a whole set of modern AI workloads, for example diffusion-based models like DALL·E 3. Extropic can reportedly run diffusion much faster than a GPU cloud, and can reportedly run transformer models as well. According to Extropic, transformers on a thermodynamic computer are up to 100M times more energy efficient than on a GPU cloud. Unfortunately, these are not yet measured results but simulation results, and there is no paper yet to back up these numbers.

Let me know your thoughts in the comments. I’m personally looking forward to seeing actual measurement results.

My New Course

Understanding emerging technologies is challenging; I saw this firsthand this year while advising investors and executives on semiconductor and AI technologies.

Now I’m making it easier than ever for you to invest in your growth. Get 25% off my world-class course Mastering Technology and Investing in Technology with the code “EARLY25”.

What you'll get

Comprehensive understanding of the Semiconductor and AI computing industries, drawing on a decade of my experience.

Presentation of my investment portfolio (average annual return of 40%).

Valuable analytical skills for identifying high-growth opportunities in the technology sector.

8 modules & over 30 lessons - presented by me in a convenient format.

Bonus: A Guide to AI Silicon startups.

Bonus: Access to future course updates (for 4 months).

The first 10 people to sign up for the course will get 25% off with the code “EARLY25”. Looking forward to seeing you there.

Below, I share my outlook on probabilistic computing:


Subscribe to Deep in Tech Newsletter to keep reading this post and get 7 days of free access to the full post archives.