NVIDIA has announced a major advancement: new optical chips that mark the shift from electrical to optical interconnects. This technology will define how AI systems are built and scaled over the next decade.

In this newsletter, I’ll explain why light is the future of data centers and walk you through:

- The new optical chips

- How they work and why they’re a big deal

- The next-generation Rubin GPU and NVIDIA’s roadmap

- Why NVIDIA is investing in quantum computing

Let’s take a closer look.

The Problem

Let’s start with the problem: new reasoning models are disrupting all previous projections for GPU demand. Early large language models (LLMs) were trained to predict the next word. Now, we are seeing the rise of reasoning models like OpenAI's o1 and DeepSeek's R1 — systems that perform multi-step thinking, planning, and autonomous reasoning.

Reasoning is computationally expensive:

It uses up to 20× more tokens per request as models "talk to themselves" during problem-solving.

It demands up to 150× more compute compared to traditional one-shot LLMs.

This shift is what’s driving the surge in demand for compute. Fast GPUs alone are no longer enough: you need infrastructure that supports massive computation at scale, and that means moving data between chips efficiently, not just adding raw compute power.

And it's why the industry has been betting heavily on silicon photonics: replacing copper wiring with optical interconnects that carry data as light.

The Copper Problem

In today’s AI data centers, network switches connect to optical transceivers that translate electrical signals into light for long-distance transmission. However, inside server racks, most connections are still electrical, relying on traditional copper wiring.

Copper is slow and power-hungry. Resistance in copper wires dissipates energy as heat, and by some estimates moving data can consume up to 70% of a data center's total power, more than the computing itself.

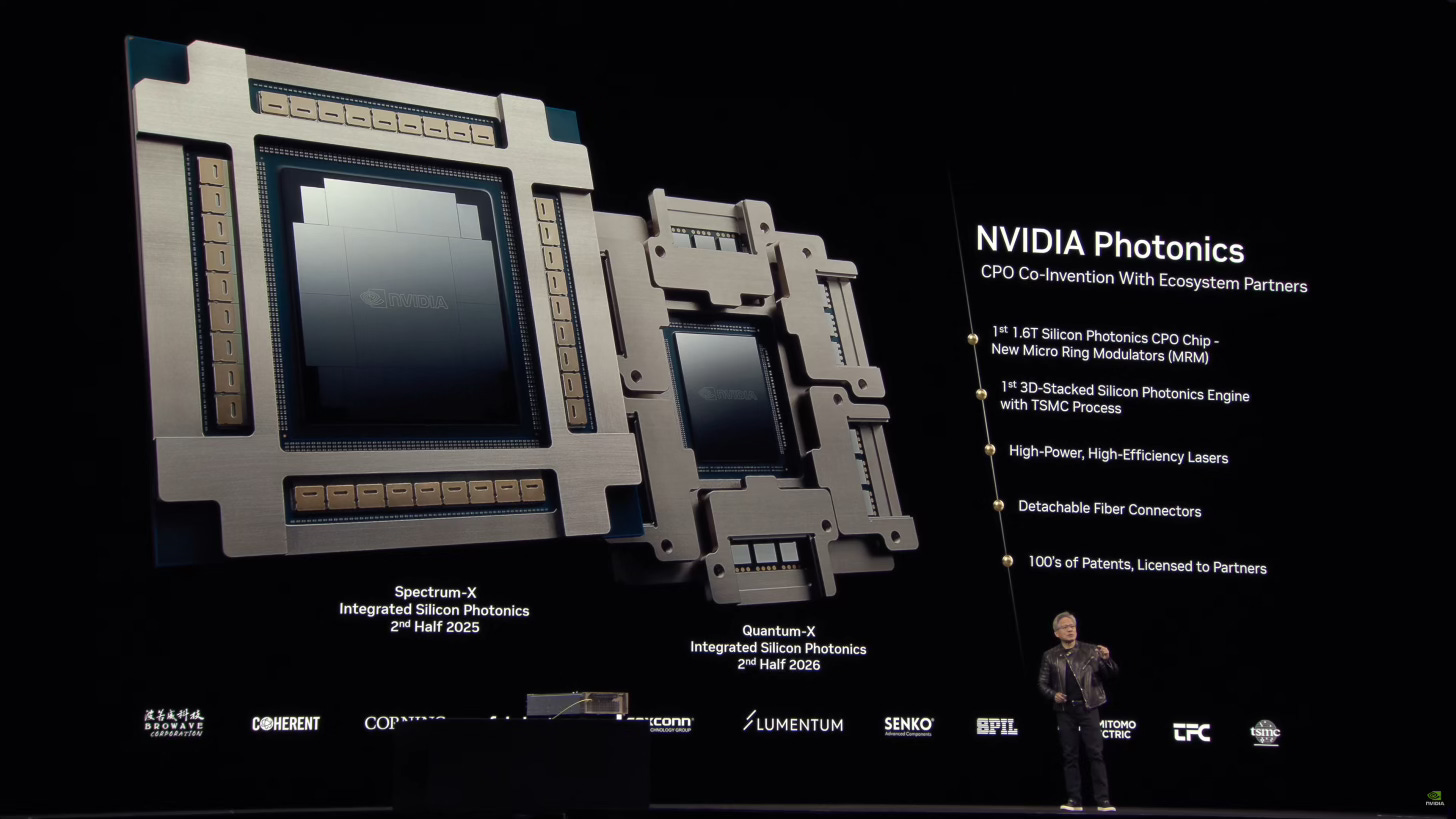

NVIDIA and TSMC have been working to solve this bottleneck. Their solution is co-packaged optics: instead of sending electrons through copper, it uses light to move data between GPUs, delivering terabit-per-second speeds, lower power consumption, and longer reach inside data centers.

Why Light?

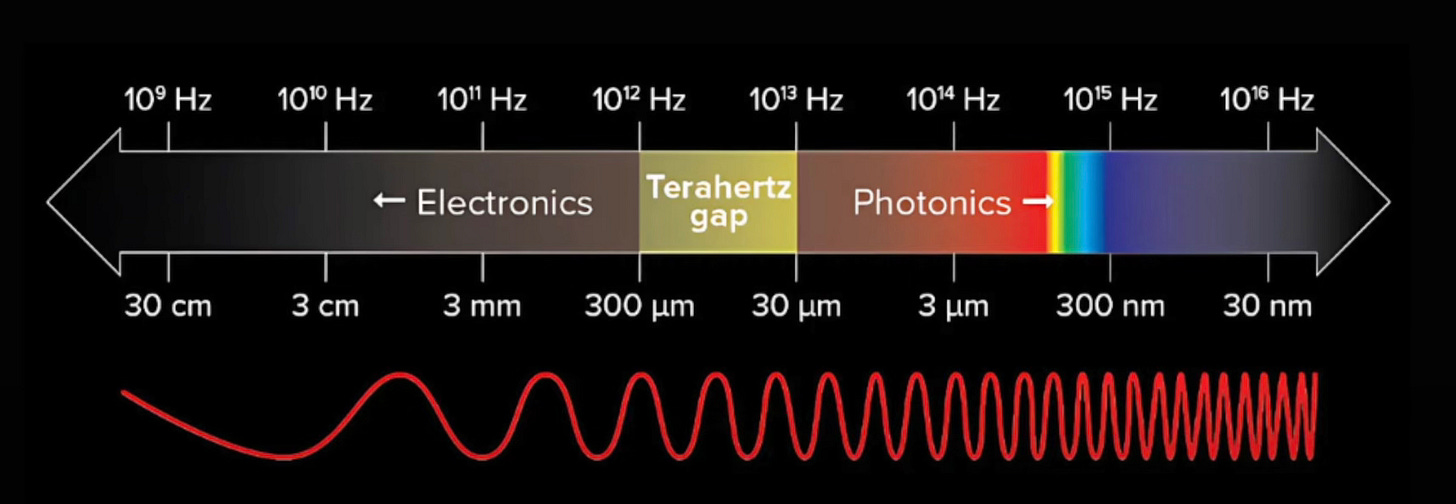

Light oscillates at extremely high frequencies: visible light spans roughly 400–750 THz, and the near-infrared light typically used in fiber optics sits around 190–230 THz. Carrier frequencies this high offer terahertz-scale bandwidth, which allows multiple wavelengths (channels) to transmit data simultaneously through a single fiber. The result is much higher bandwidth than electrical signals, with less heat and low latency.
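To make these numbers concrete, here is a minimal Python sketch of the arithmetic. It converts a wavelength to its frequency with f = c / λ and adds up the capacity of several wavelength channels sharing one fiber. The specific wavelengths (1310 nm and 1550 nm are common in fiber optics) and the 8 × 200 Gb/s channel plan are illustrative assumptions, not figures from NVIDIA.

```python
C = 299_792_458  # speed of light in vacuum, in m/s

def wavelength_nm_to_thz(wavelength_nm: float) -> float:
    """Convert a wavelength in nanometers to a frequency in terahertz."""
    return C / (wavelength_nm * 1e-9) / 1e12

def aggregate_gbps(channels: int, gbps_per_channel: float) -> float:
    """Total capacity when several wavelengths share one fiber."""
    return channels * gbps_per_channel

# Visible light (400 and 750 nm) versus common fiber-optic wavelengths.
for nm in (400, 750, 1310, 1550):
    print(f"{nm} nm -> {wavelength_nm_to_thz(nm):.1f} THz")

# Hypothetical channel plan: 8 wavelengths at 200 Gb/s each.
print(aggregate_gbps(8, 200), "Gb/s on a single fiber")
```

The point of the sketch is that capacity scales with the number of wavelengths: each extra color of light is another independent channel on the same fiber.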

How the New Optical Chip Works

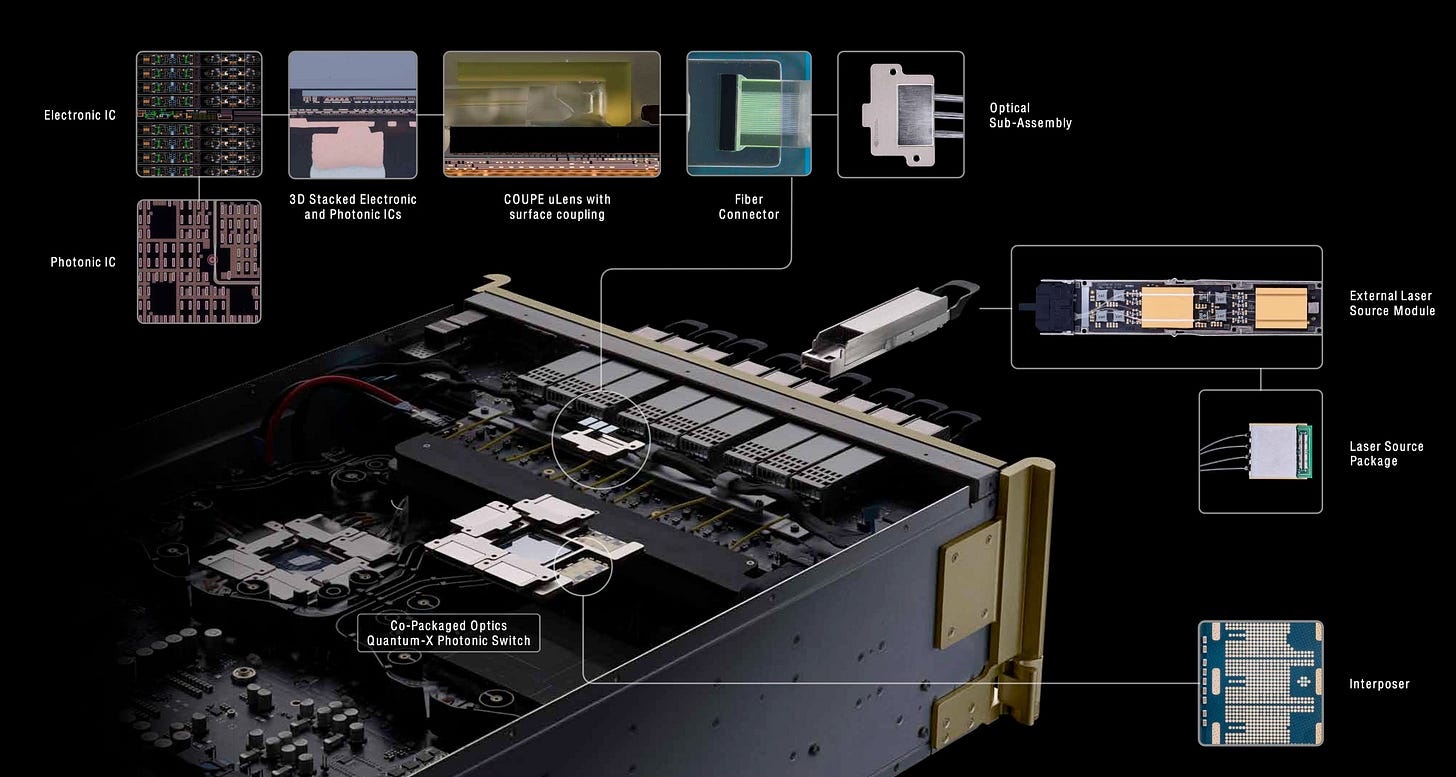

Encoding Data: Lasers generate light beams. Tiny micro-ring modulators (MRMs) encode information by altering the light's intensity, similar to blinking a flashlight at extreme speeds.

Data Transmission: Light travels through microscopic silicon waveguides, carrying multiple data streams at once.

Decoding Data: Photodetectors capture the light and convert it back into electrical signals for the GPU.
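The three steps above can be sketched as a toy simulation. This is a deliberately simplified model, assuming plain on-off keying (bright for 1, dim for 0) to match the flashlight analogy; real modulators may use richer signaling, and the intensity levels, loss, and noise values here are made up for illustration.

```python
import random

ON, OFF = 1.0, 0.1   # hypothetical light intensities for bits 1 and 0
LOSS = 0.8           # hypothetical fraction of light surviving the waveguide

def encode(bits):
    """Modulator: map each bit to a light intensity (on-off keying)."""
    return [ON if b else OFF for b in bits]

def transmit(intensities, noise=0.02, seed=0):
    """Waveguide: attenuate the light and add a little random noise."""
    rng = random.Random(seed)
    return [i * LOSS + rng.uniform(-noise, noise) for i in intensities]

def decode(received):
    """Photodetector: compare each sample to a midpoint threshold."""
    threshold = LOSS * (ON + OFF) / 2
    return [1 if r > threshold else 0 for r in received]

bits = [1, 0, 1, 1, 0, 0, 1, 0]
recovered = decode(transmit(encode(bits)))
print(recovered == bits)
```

As long as the noise stays well below the gap between the bright and dim levels, the detector recovers the original bits.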

Each optical engine can transfer data at 1.6 terabits per second, enabling extremely fast GPU-to-GPU communication across racks and clusters. I dive deeper into how it works in my video.
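For a sense of scale, here is a rough back-of-the-envelope calculation in Python of how long a transfer takes at 1.6 Tb/s. It ignores protocol overhead and latency, and the 100 GB payload is a hypothetical example rather than a figure from NVIDIA.

```python
def transfer_seconds(payload_gigabytes: float, link_tbps: float = 1.6) -> float:
    """Ideal transfer time: payload size in bits divided by link rate."""
    payload_bits = payload_gigabytes * 8e9   # 1 GB = 8e9 bits
    link_bits_per_second = link_tbps * 1e12  # 1 Tb/s = 1e12 bits/s
    return payload_bits / link_bits_per_second

# Hypothetical example: moving 100 GB of data over one 1.6 Tb/s link.
print(f"{transfer_seconds(100):.2f} s")
```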

TSMC’s Manufacturing Breakthrough

To enable this, TSMC developed COUPE (Compact Universal Photonic Engine), a 3D packaging technology that tightly stacks photonic and electronic circuits together.

The electronic chip (6nm, 220 million transistors) sits on top.

The photonic chip (65nm) contains around 1,000 devices like modulators, waveguides, and detectors.

This tight integration eliminates the delays and inefficiencies of traditional designs, dramatically reducing power consumption and boosting speeds inside AI clusters.

Why it’s a big deal:

- Cuts the power consumed by data center networking by up to 3.5x.

- Reduces manual handling and downtime in data centers.

- Enables scaling AI clusters to millions of GPUs.
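A "3.5x" reduction is easy to misread, so here is a short Python sketch of what it means in practice: the new power draw is the old one divided by 3.5, which works out to a saving of about 71%. The 1,000 W baseline is a hypothetical number chosen only to make the arithmetic easy to follow.

```python
def power_after(baseline_watts: float, factor: float = 3.5) -> float:
    """Power draw after an N-times reduction."""
    return baseline_watts / factor

def percent_saved(factor: float = 3.5) -> float:
    """Share of the original power that is saved, in percent."""
    return (1 - 1 / factor) * 100

# Hypothetical baseline of 1,000 W for the interconnect.
print(f"{power_after(1000):.0f} W remaining, {percent_saved():.1f}% saved")
```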

The first generation of TSMC’s COUPE technology will enter mass production in the second half of 2026, with NVIDIA Rubin Ultra expected to be one of the first adopters.

Below, we discuss the future of silicon photonics, the new Rubin and Rubin Ultra GPUs, NVIDIA’s roadmap for the coming years, and why the company is investing in quantum computing.
